Introduction: Demystifying the Machine Mind in Medicine

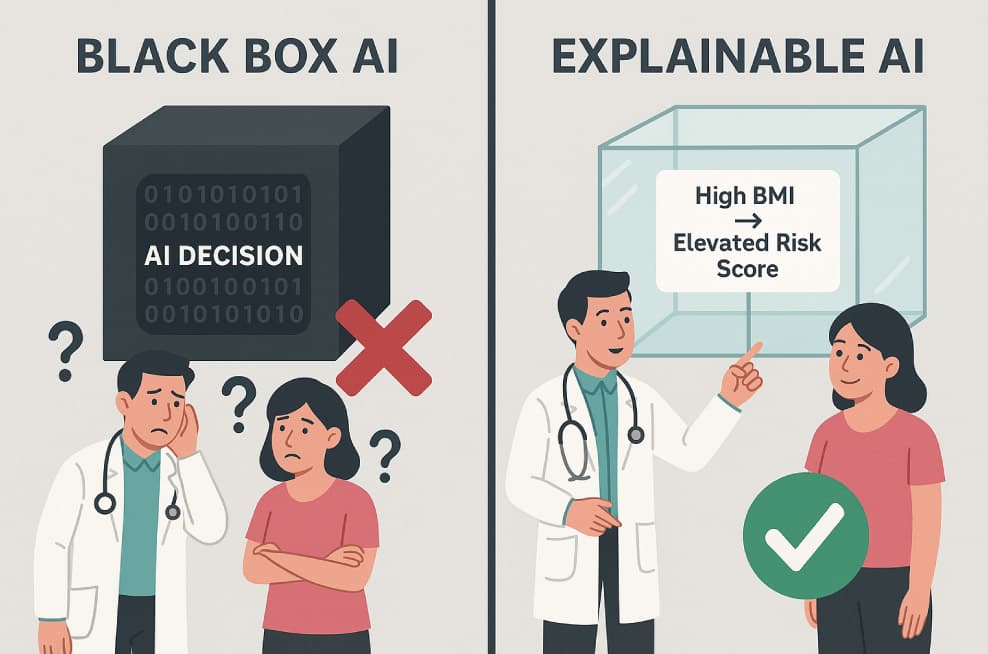

In today’s fast-evolving healthcare landscape, AI is no longer a futuristic concept; it is a present reality. From predicting disease to reading radiology scans, AI is making remarkable strides. But there is a problem: many of these systems are “black boxes” that deliver results without showing how they got there.

This lack of transparency raises ethical and clinical concerns. That’s where explainable AI in medical diagnostics comes in. It aims to open the black box, allowing clinicians and patients to understand the “why” behind AI-driven decisions.

What is Explainable AI in Medical Diagnostics?

Definition and Context

Explainable AI (XAI) refers to AI systems whose decision-making processes can be understood by humans. In medical diagnostics, this means making the AI’s logic transparent to doctors, patients, and regulators.

Instead of opaque algorithms offering blind conclusions, explainable AI in medical diagnostics provides reasons—helping humans interpret, trust, and validate results.

The Rising Role of AI in Healthcare

From Support to Decision-Maker

AI tools now assist in:

- Radiology and pathology image analysis

- Risk prediction models for chronic diseases

- Genomic data interpretation

- Triage and clinical decision support systems

While this enhances diagnostic accuracy, it also places immense responsibility on these tools, which makes explainability vital.

Why Black Box AI Fails Medical Standards

Trust Deficit in Patient Care

Doctors are trained to make evidence-based decisions. When AI outputs lack reasoning, it becomes difficult for clinicians to:

- Justify treatment plans

- Gain patient trust

- Ensure regulatory compliance

Legal and Ethical Implications

In a field where lives are at stake, using non-explainable models poses legal risks. If a patient is misdiagnosed, and the reasoning behind the diagnosis cannot be shown, who is accountable?

Benefits of Explainable AI in Clinical Decision Support

Improved Collaboration Between Doctors and AI

When AI decisions are interpretable, they complement clinical expertise—not compete with it. This enables:

- Faster diagnoses

- Higher clinician confidence

- Better communication with patients

Enhanced Patient Engagement

Patients are more likely to follow treatment plans when they understand the “why.” Transparent AI models build patient trust by delivering both answers and explanations.

Real-World Applications of Explainable AI in Diagnostics

Case 1: Radiology

Explainable AI tools such as heat-map overlays show which regions of an X-ray most influenced the AI’s diagnosis, so radiologists can check whether the model focused on clinically relevant findings.
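One simple way to produce such a heat map is occlusion sensitivity: mask one region at a time and record how much the model’s score drops. The sketch below is only an illustration under stated assumptions. The 4x4 “image” and the `toy_model_score` function are hypothetical stand-ins for a real scan and a real classifier, and production tools typically use more sophisticated attribution methods.

```python
# Occlusion sensitivity on a toy image.
# `toy_model_score` is a hypothetical stand-in for a trained classifier.

def toy_model_score(image):
    """Hypothetical 'model': mean intensity of the central 2x2 region."""
    region = [image[r][c] for r in (1, 2) for c in (1, 2)]
    return sum(region) / len(region)

def occlusion_heatmap(image, score_fn, patch=1, fill=0.0):
    """Return a grid holding the score drop observed when each patch is masked."""
    rows, cols = len(image), len(image[0])
    baseline = score_fn(image)
    heatmap = [[0.0] * cols for _ in range(rows)]
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            for rr in range(r, min(r + patch, rows)):
                for cc in range(c, min(c + patch, cols)):
                    occluded[rr][cc] = fill
            drop = baseline - score_fn(occluded)
            # A larger drop means the masked patch mattered more to the score.
            for rr in range(r, min(r + patch, rows)):
                for cc in range(c, min(c + patch, cols)):
                    heatmap[rr][cc] = drop
    return heatmap

image = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.8, 0.4, 0.1],
    [0.1, 0.4, 0.4, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
heatmap = occlusion_heatmap(image, toy_model_score)
for row in heatmap:
    print(" ".join(f"{value:.2f}" for value in row))
```

In this toy case the largest drop falls on the brightest central pixel, and cells outside the central region show no drop because the stand-in model ignores them. A radiologist reading a real overlay would ask the same question: does the highlighted area match where the pathology actually is?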

Case 2: Predictive Risk Models

In chronic disease management, AI that highlights which patient factors (e.g., BMI, blood sugar) led to a prediction makes it easier for clinicians to adjust treatments.
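For a linear risk model, this kind of factor-level explanation can be read directly from the coefficients. The sketch below assumes a logistic regression and reports how much each factor shifts the log-odds relative to a reference patient. All coefficients, feature names, and patient values are hypothetical and chosen purely for illustration; they do not come from any validated clinical model.

```python
import math

# Hypothetical coefficients for illustration only; not a validated clinical model.
INTERCEPT = -8.0
COEFFICIENTS = {"bmi": 0.10, "fasting_glucose_mmol_l": 0.50, "age_years": 0.02}

def predict_risk(patient):
    """Logistic regression: convert the weighted sum of factors to a probability."""
    logit = INTERCEPT + sum(COEFFICIENTS[k] * patient[k] for k in COEFFICIENTS)
    return 1.0 / (1.0 + math.exp(-logit))

def explain(patient, reference):
    """Per-factor shift in log-odds relative to a reference patient, largest first."""
    contributions = {
        k: COEFFICIENTS[k] * (patient[k] - reference[k]) for k in COEFFICIENTS
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Hypothetical reference and patient values.
reference = {"bmi": 25.0, "fasting_glucose_mmol_l": 5.0, "age_years": 50.0}
patient = {"bmi": 33.0, "fasting_glucose_mmol_l": 7.5, "age_years": 58.0}

risk = predict_risk(patient)
ranked = explain(patient, reference)
print(f"Predicted risk: {risk:.2f}")
for name, delta in ranked:
    print(f"{name}: {delta:+.2f} log-odds vs. reference")
```

With these made-up numbers, fasting glucose ranks as the largest contributor, followed by BMI and then age. Non-linear models need dedicated attribution methods to produce a comparable breakdown, but the output a clinician sees has the same shape: a ranked list of factors with signed contributions.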

Case 3: Oncology

In cancer diagnosis, explainable AI systems help prioritize biopsy results or treatment plans, making the decision-making process visible and verifiable.

Challenges in Implementing Transparent AI in Healthcare

Complexity vs. Simplicity

In general, the more complex the model (e.g., a deep neural network), the harder it is to interpret. Simplifying a model without losing performance is a delicate balance.

Clinical Workflow Integration

Explainability must be integrated into existing hospital systems without slowing down the diagnostic process. It must feel intuitive, not technical.

Standardization and Regulation

There is currently no universal framework for what counts as “explainable” in AI. Regulatory bodies are working toward consistent benchmarks.

Future of Medical Diagnostics: Ethical and Scalable AI

The future isn’t just about more intelligent machines—it’s about transparent AI that can scale ethically across global health systems.

What’s Next?

- Human-in-the-loop systems to validate AI reasoning

- AI literacy training for clinicians

- Cross-disciplinary collaboration between data scientists, ethicists, and healthcare providers

- Global policies ensuring fairness and interpretability in diagnostic tools
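As a rough illustration of the human-in-the-loop idea, the sketch below sends every AI prediction to a clinician, raises the priority of low-confidence cases, and logs whether the clinician agreed with the model. The threshold, the queue names, the `Prediction` fields, and the example cases are all assumptions made up for this example, not part of any specific product or standard.

```python
from dataclasses import dataclass

# Hypothetical cut-off; a real value would be set and audited by clinical governance.
REVIEW_THRESHOLD = 0.90

@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model probability for `label`, between 0 and 1
    explanation: str   # short human-readable rationale shown to the clinician

def triage(prediction, threshold=REVIEW_THRESHOLD):
    """Every case is reviewed by a clinician; low confidence raises the priority."""
    if prediction.confidence >= threshold:
        return "standard_review"
    return "priority_review"

def record_decision(prediction, clinician_label):
    """Log whether the clinician agreed, so disagreements can be audited later."""
    return {
        "case_id": prediction.case_id,
        "model_label": prediction.label,
        "clinician_label": clinician_label,
        "agreed": prediction.label == clinician_label,
        "queue": triage(prediction),
    }

# Made-up example cases.
cases = [
    Prediction("case-001", "pneumonia", 0.97, "opacity in right lower lobe"),
    Prediction("case-002", "normal", 0.62, "no dominant region"),
]
log = [
    record_decision(cases[0], "pneumonia"),
    record_decision(cases[1], "pneumonia"),
]
for entry in log:
    print(entry)
```

The design choice here is that the model never finalizes a result on its own: confidence only changes how urgently a human looks at the case, and the log of disagreements gives teams a way to audit where the model’s reasoning fails.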

By embedding explainable AI in medical diagnostics, we move from blind automation to collaborative intelligence—preserving humanity in high-tech healthcare.

Conclusion: The Age of Enlightened AI in Medicine

The promise of AI in healthcare will only be realized when it’s as transparent as it is intelligent. The black box must give way to clarity, collaboration, and compassion.

Explainable AI is not merely a technical upgrade; it is a moral and clinical necessity for modern medicine. In the diagnostic process, understanding the “how” matters just as much as the “what.”

FAQs:

1. What is explainable AI in medical diagnostics?

It refers to AI systems in healthcare that offer understandable reasons behind their diagnostic decisions, allowing medical professionals to interpret and validate the outputs.

2. Why is explainability important in AI-based medical tools?

It helps clinicians verify AI outputs and catch errors, builds clinician and patient trust, supports accountability, and aids in regulatory compliance.

3. How does explainable AI differ from traditional AI in healthcare?

Traditional AI (black box models) provides results without insight into how they were reached. Explainable AI offers interpretable, transparent outputs aligned with clinical thinking.

4. What are some real-world uses of explainable AI in diagnostics?

Examples include AI-assisted radiology with visual heatmaps, chronic disease risk prediction tools, and oncology diagnostics offering ranked treatment options with explanations.

5. What challenges does explainable AI face in healthcare?

Major challenges include balancing performance with transparency, integrating into clinical workflows, and creating standardized regulations for interpretability.
